Setup Kubernetes


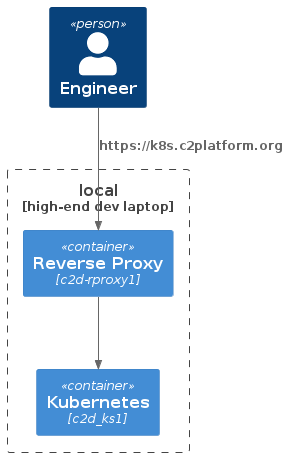

This how-to describes how to create and manage the Kubernetes cluster (instance) c2d-ks1, which is based on MicroK8s. See the GitLab project c2platform/ansible for more information.

Overview

Prerequisites

Create the reverse and forward proxy c2d-rproxy1.

c2

unset PLAY # ensure all plays run

vagrant up c2d-rproxy1
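Before creating c2d-ks1, you may want to confirm that c2d-rproxy1 is actually running. The sketch below is an illustration only and is not part of the c2platform/ansible project. The helper name `vagrant_state` is hypothetical, and it assumes Vagrant's `--machine-readable` output format, in which each line has the form `timestamp,target,type,data` and the machine state is reported with type `state`.

```shell
#!/bin/sh
# Hypothetical helper (not part of c2platform/ansible).
# Reads `vagrant status --machine-readable` output on stdin and prints the
# state (for example "running") reported for the machine named in $1.
# Lines of other types (provider-name, state-human-short, ...) are ignored.
vagrant_state() {
  awk -F, -v machine="$1" '$2 == machine && $3 == "state" { print $4 }'
}

# Usage, from the project directory:
#   vagrant status c2d-rproxy1 --machine-readable | vagrant_state c2d-rproxy1
```

If this prints anything other than `running`, run `vagrant up c2d-rproxy1` again before continuing.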

For more information about the various roles that c2d-rproxy1 performs in this project:

- Setup Reverse Proxy and CA server

- Setup SOCKS proxy

- Managing Server Certificates as a Certificate Authority

- Setup DNS for Kubernetes

Ansible play

The Ansible play is plays/mw/mk8s.yml. This play incorporates two roles related to Kubernetes:

- c2platform.mw.microk8s is used to install MicroK8s.

- c2platform.mw.kubernetes is used to manage the MicroK8s cluster.

The Ansible configuration for this play is split across two files:

- group_vars/mk8s/main.yml

- group_vars/mk8s/kubernetes.yml

Provision

To create the first Kubernetes node, c2d-ks1:

vagrant up c2d-ks1

As far as Kubernetes is concerned, the mk8s.yml play performs the following steps:

- Install MicroK8s and add vagrant to the microk8s group.

- Enable the dns, registry, dashboard and metallb add-ons.

- Configure the metallb add-on with IP range 1.1.4.10-1.1.4.100.

- Configure the dns add-on with 1.1.4.203:5353 as the only DNS server.
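Once provisioning has finished, you can check whether the add-ons listed above are actually enabled. This is a minimal sketch and not part of the project: the functions `addon_enabled` and `check_addons` are hypothetical, and the parser assumes the layout of `microk8s status` output shown under Verify, where add-ons are listed below an `enabled:` line and a `disabled:` line.

```shell
#!/bin/sh
# Hypothetical helpers (not part of c2platform/ansible).

# Reads `microk8s status` output on stdin and succeeds (exit 0) only if the
# add-on named in $1 is listed in the "enabled:" section.
addon_enabled() {
  awk -v addon="$1" '
    /^[[:space:]]*enabled:/  { section = "enabled"; next }
    /^[[:space:]]*disabled:/ { section = "disabled"; next }
    section == "enabled" && $1 == addon { found = 1 }
    END { if (found) exit 0; exit 1 }
  '
}

# Reports each add-on that the mk8s.yml play is expected to enable and
# returns non-zero if any of them is not enabled. Run this on c2d-ks1.
check_addons() {
  status=$(microk8s status --wait-ready) || return 1
  rc=0
  for addon in dns registry dashboard metallb; do
    if printf '%s\n' "$status" | addon_enabled "$addon"; then
      echo "$addon: enabled"
    else
      echo "$addon: NOT enabled"
      rc=1
    fi
  done
  return "$rc"
}
```

After `vagrant ssh c2d-ks1`, calling `check_addons` prints one line per add-on.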

Verify

Cluster status

To check the status of the cluster:

vagrant ssh c2d-ks1

microk8s status --wait-ready

The output should show that MicroK8s is running and list the enabled and disabled add-ons.


[:ansible-dev]└2 master(+3/-3,1)* 1 ± vagrant ssh c2d-ks1
Welcome to Ubuntu 22.04.1 LTS (GNU/Linux 5.19.0-32-generic x86_64)

 * Documentation:  https://help.ubuntu.com
 * Management:     https://landscape.canonical.com
 * Support:        https://ubuntu.com/advantage

Last login: Tue Feb 28 06:40:07 2023 from 10.84.107.1
vagrant@c2d-ks1:~$ microk8s status --wait-ready
microk8s is running
high-availability: no
  datastore master nodes: 127.0.0.1:19001
  datastore standby nodes: none
addons:
  enabled:
    ha-cluster # (core) Configure high availability on the current node
    helm # (core) Helm - the package manager for Kubernetes
    helm3 # (core) Helm 3 - the package manager for Kubernetes
  disabled:
    cert-manager # (core) Cloud native certificate management
    community # (core) The community addons repository
    dashboard # (core) The Kubernetes dashboard
    dns # (core) CoreDNS
    gpu # (core) Automatic enablement of Nvidia CUDA
    host-access # (core) Allow Pods connecting to Host services smoothly
    hostpath-storage # (core) Storage class; allocates storage from host directory
    ingress # (core) Ingress controller for external access
    kube-ovn # (core) An advanced network fabric for Kubernetes
    mayastor # (core) OpenEBS MayaStor
    metallb # (core) Loadbalancer for your Kubernetes cluster
    metrics-server # (core) K8s Metrics Server for API access to service metrics
    minio # (core) MinIO object storage
    observability # (core) A lightweight observability stack for logs, traces and metrics
    prometheus # (core) Prometheus operator for monitoring and logging
    rbac # (core) Role-Based Access Control for authorisation
    registry # (core) Private image registry exposed on localhost:32000
    storage # (core) Alias to hostpath-storage add-on, deprecated
vagrant@c2d-ks1:~$
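In addition to `microk8s status`, you can check that the node itself reports `Ready`. The sketch below is an illustration only: `node_ready` is a hypothetical helper that parses the columns of `kubectl get nodes --no-headers` (NAME, STATUS, ROLES, AGE, VERSION).

```shell
#!/bin/sh
# Hypothetical helper (not part of c2platform/ansible).
# Reads `kubectl get nodes --no-headers` output on stdin and succeeds only if
# the node named in $1 has a STATUS of exactly "Ready" (so "NotReady" fails).
node_ready() {
  awk -v node="$1" '$1 == node && $2 == "Ready" { found = 1 }
    END { if (found) exit 0; exit 1 }'
}

# Usage on c2d-ks1:
#   kubectl get nodes --no-headers | node_ready c2d-ks1 && echo "c2d-ks1 is Ready"
```

Because the match is exact, a cordoned node (STATUS `Ready,SchedulingDisabled`) is also reported as not ready by this helper.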

Dashboard

Verify that the dashboard is functional using curl as shown below. The command should output the dashboard HTML.

curl https://1.1.4.155/ --insecure
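For a scripted check you may only care about the HTTP status code rather than the HTML. A minimal sketch, assuming that any 2xx response means the dashboard is up; `is_2xx` and `check_dashboard` are hypothetical names, and the 10 second timeout is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical helpers (not part of c2platform/ansible).

# Succeeds if $1 is an HTTP status code in the 2xx range.
is_2xx() {
  case "$1" in
    2[0-9][0-9]) return 0 ;;
    *) return 1 ;;
  esac
}

# Requests URL $1 without verifying the TLS certificate (like --insecure
# above) and succeeds only if the server answers with a 2xx status code.
# If the connection fails, curl writes "000", which is not 2xx.
check_dashboard() {
  code=$(curl --insecure --silent --output /dev/null \
    --max-time 10 --write-out '%{http_code}' "$1")
  is_2xx "$code"
}

# Usage:
#   check_dashboard https://1.1.4.155/ && echo "dashboard is up"
```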

With the Kubernetes Dashboard up and running, you should be able to navigate to https://k8s.c2platform.org/ and log in using a token. You can get the value of the token with the commands below.

vagrant ssh c2d-ks1

kubectl -n kube-system describe secret microk8s-dashboard-token
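`kubectl describe` prints the token together with other secret metadata. If you only want the token itself, for example to paste it into the login page, a sketch like the following could be used. It assumes the secret stores the token under the `token` key, as service account token secrets do; the function names are hypothetical. Secret data returned by `kubectl get` is base64-encoded, hence the decode step.

```shell
#!/bin/sh
# Hypothetical helpers (not part of c2platform/ansible).

# Decodes a base64-encoded Kubernetes secret value read from stdin.
decode_secret_value() {
  base64 -d
}

# Prints only the token stored in the microk8s-dashboard-token secret,
# followed by a newline. Run on c2d-ks1, where kubectl is configured.
dashboard_token() {
  kubectl -n kube-system get secret microk8s-dashboard-token \
    -o jsonpath='{.data.token}' | decode_secret_value
  echo
}

# Usage on c2d-ks1:
#   dashboard_token
```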

See Setup the Kubernetes Dashboard for more information.

DNS

CoreDNS is configured to use a custom DNS server running on c2d-rproxy1. You can use dig to verify that gitlab.c2platform.org resolves. See Setup DNS for Kubernetes for more information.
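As an illustration, the following sketch queries the DNS server that the dns add-on is configured to forward to (1.1.4.203:5353, see Provision) directly with dig. Note that this checks the upstream server, not CoreDNS itself. The helper names are hypothetical. Because `dig +short` may print CNAME targets before the address, only lines that look like IPv4 addresses are kept.

```shell
#!/bin/sh
# Hypothetical helpers (not part of c2platform/ansible).

# Prints the first line on stdin that looks like an IPv4 address.
first_ipv4() {
  grep -E '^([0-9]{1,3}\.){3}[0-9]{1,3}$' | head -n 1
}

# Resolves the A record for name $1 via DNS server $2 on port $3 and prints
# the first IPv4 address; fails if no address was returned.
resolve_via() {
  addr=$(dig @"$2" -p "$3" +short "$1" A | first_ipv4)
  [ -n "$addr" ] && printf '%s\n' "$addr"
}

# Usage:
#   resolve_via gitlab.c2platform.org 1.1.4.203 5353
```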

Next steps

GitLab GitOps

To deploy a simple application using a GitOps workflow, see Setup a GitLab GitOps workflow for Kubernetes.

Helm, kubectl

On c2d-ks1, kubectl, helm and helm3 are available and configured to interact with the cluster.


vagrant@c2d-ks1:~$ helm version
version.BuildInfo{Version:"v3.9.1+unreleased", GitCommit:"0b977ed36f2db4f947a7a107fc3f5298401c4a96", GitTreeState:"clean", GoVersion:"go1.19.5"}
vagrant@c2d-ks1:~$ helm3 version
version.BuildInfo{Version:"v3.9.1+unreleased", GitCommit:"0b977ed36f2db4f947a7a107fc3f5298401c4a96", GitTreeState:"clean", GoVersion:"go1.19.5"}
vagrant@c2d-ks1:~$
vagrant@c2d-ks1:~$ kubectl get namespaces
NAME                 STATUS   AGE
kube-system          Active   3h35m
kube-public          Active   3h35m
kube-node-lease      Active   3h35m
default              Active   3h35m
container-registry   Active   3h34m
metallb-system       Active   3h34m
gitlab-agent-c2d     Active   3h30m
gitlab-runners       Active   3h30m
nja                  Active   3h30m
njp                  Active   3h30m
vagrant@c2d-ks1:~$
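If one of these commands is not found after provisioning, a quick check like the sketch below lists which tools are missing from PATH. It only checks availability, not whether a tool is configured for the cluster; `missing_tools` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper (not part of c2platform/ansible).
# Prints the name of every command given as an argument that cannot be
# found on PATH; prints nothing when all of them are available.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Usage on c2d-ks1:
#   missing=$(missing_tools kubectl helm helm3)
#   if [ -n "$missing" ]; then echo "missing: $missing"; fi
```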

Known issues

missing profile snap.microk8s.microk8s.

The MicroK8s node c2d-ks1 intermittently fails to produce a working Kubernetes cluster. In that case, the command microk8s status --wait-ready shows the message:

missing profile snap.microk8s.microk8s. Please make sure that the snapd.apparmor service is enabled and started


vagrant@c2d-ks1:~$ microk8s status --wait-ready
missing profile snap.microk8s.microk8s.
Please make sure that the snapd.apparmor service is enabled and started
vagrant@c2d-ks1:~$ sudo systemctl status apparmor.service
● apparmor.service - Load AppArmor profiles
     Loaded: loaded (/lib/systemd/system/apparmor.service; enabled; vendor preset: enabled)
     Active: active (exited) since Mon 2023-03-06 04:43:26 UTC; 20min ago
       Docs: man:apparmor(7)
             https://gitlab.com/apparmor/apparmor/wikis/home/
   Main PID: 99 (code=exited, status=0/SUCCESS)

Mar 06 04:43:26 c2d-ks1 apparmor.systemd[99]: Not starting AppArmor in container

To fix this, run the following commands:

sudo apparmor_parser --add /var/lib/snapd/apparmor/profiles/snap.microk8s.*

sudo reboot now
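The check and the fix can be combined so that the profiles are only reloaded, and the node only rebooted, when the error is actually present. This is a sketch under the assumption that the error text matches the message shown above; the function names are hypothetical. Be aware that `fix_apparmor_if_needed` reboots c2d-ks1 when it applies the fix.

```shell
#!/bin/sh
# Hypothetical helpers (not part of c2platform/ansible).

# Succeeds if the text on stdin contains the AppArmor error described above.
needs_apparmor_fix() {
  grep -qF 'missing profile snap.microk8s.microk8s'
}

# Reloads the MicroK8s AppArmor profiles and reboots, but only when the
# error is present. Run on c2d-ks1. WARNING: this reboots the node.
fix_apparmor_if_needed() {
  if microk8s status 2>&1 | needs_apparmor_fix; then
    sudo apparmor_parser --add /var/lib/snapd/apparmor/profiles/snap.microk8s.*
    sudo reboot now
  else
    echo "AppArmor error not found; nothing to do"
  fi
}
```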
